Creating a commit with multiple files to Github with JS on the web

My site is entirely static. It’s built with Hugo and hosted with Zeit. I’m pretty happy with the setup, I get near instant builds and super fast CDN’d content delivery and I can do all the things that I need to because I don’t have to manage any state. I’ve created a simple UI for this site and also my podcast creator that enables me to quickly post new content to my statically hosted site.

Screen Recorder: recording microphone and the desktop audio at the same time — ⭐

I have a goal of building the world’s simplest screen recording software and I’ve been slowly noodling around on the project for the last couple of months (I mean really slowly).

In previous posts I had got the screen recording and a voice overlay by futzing about with the streams from all the input sources. One area of frustration though was that I could not work out how to get the audio from the desktop and overlay the audio from the speaker. I finally worked out how to do it.

Firstly, getDisplayMedia in Chrome now allows audio capture. It seemed like an odd oversight in the spec that you could not specify audio: true in the function call; now you can.

const audio = audioToggle.checked || false;

desktopStream = await navigator.mediaDevices.getDisplayMedia({ video:true, audio: audio });

Secondly, I had originally thought that by creating two tracks in the audio stream I would be able to get what I wanted. However, I learnt that Chrome’s MediaRecorder API can only output one track, and it wouldn’t have worked anyway because tracks are like the multiple audio tracks on a DVD: only one can play at a time.

The solution is probably simple to a lot of people, but it was new to me: Use Web Audio.

It turns out that the Web Audio API has createMediaStreamSource and createMediaStreamDestination, both of which are needed to solve the problem. createMediaStreamSource can take streams from my desktop audio and microphone, and by connecting the two together into the object created by createMediaStreamDestination I get a single stream that I can pipe into the MediaRecorder API.

const mergeAudioStreams = (desktopStream, voiceStream) => {

const context = new AudioContext();

// Create a couple of sources

const source1 = context.createMediaStreamSource(desktopStream);

const source2 = context.createMediaStreamSource(voiceStream);

const destination = context.createMediaStreamDestination();

const desktopGain = context.createGain();

const voiceGain = context.createGain();

desktopGain.gain.value = 0.7;

voiceGain.gain.value = 0.7;

source1.connect(desktopGain).connect(destination);

// Connect source2

source2.connect(voiceGain).connect(destination);

return destination.stream.getAudioTracks();

};

Simples.
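To show where the merged track ends up, here is a minimal sketch of picking the tracks to hand to MediaRecorder. The helper name pickRecordingTracks is hypothetical and not part of the original project. It assumes mergeAudioStreams is the function above, and it only merges when both streams carry audio, because as far as I know createMediaStreamSource throws if it is given a stream with no audio track.

```javascript
// Hypothetical helper (not from the original project): decide which tracks to
// record, merging audio only when both streams actually carry audio.
const pickRecordingTracks = (desktopStream, voiceStream, mergeAudioStreams) => {
  const videoTracks = desktopStream.getVideoTracks();
  const desktopAudio = desktopStream.getAudioTracks();
  const voiceAudio = voiceStream ? voiceStream.getAudioTracks() : [];

  let audioTracks;
  if (desktopAudio.length > 0 && voiceAudio.length > 0) {
    // Both sources present: mix them down to a single track via Web Audio.
    audioTracks = mergeAudioStreams(desktopStream, voiceStream);
  } else {
    // Only one (or neither) source has audio: pass it through untouched.
    audioTracks = desktopAudio.length > 0 ? desktopAudio : voiceAudio;
  }
  return [...videoTracks, ...audioTracks];
};

// In a browser the result would feed the recorder, for example:
//   const tracks = pickRecordingTracks(desktopStream, voiceStream, mergeAudioStreams);
//   const recorder = new MediaRecorder(new MediaStream(tracks));
```

Passing mergeAudioStreams in as an argument keeps the helper free of browser globals, so the branching can be checked with plain objects.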

The full code can be found on my glitch, and the demo can be found here: https://screen-record-voice.glitch.me/

Extracting text from an image: Experiments with Shape Detection — ⭐

I had a little down time after Google IO and I wanted to scratch a long-term itch I’ve had. I just want to be able to copy text that is held inside images in the browser. That is all. I think it would be a neat feature for everyone.

It’s not easy to add functionality directly into Chrome, but I know I can take advantage of the intent system on Android and I can now do that with the Web (or at least Chrome on Android).

Two new additions to the web platform - Share Target Level 2 (or as I like to call it File Share) and the TextDetector in the Shape Detection API - have allowed me to build a utility that I can Share images to and get the text held inside them.

The basic implementation is relatively straightforward: you create a Share Target and a handler in the Service Worker, and then once you have the image that the user has shared you run the TextDetector on it.

The Share Target API allows your web application to be part of the native sharing sub-system, and in this case you can now register to handle all image/* types by declaring it inside your Web App Manifest as follows.

"share_target": {

"action": "/index.html",

"method": "POST",

"enctype": "multipart/form-data",

"params": {

"files": [

{

"name": "file",

"accept": ["image/*"]

}

]

}

}

When your PWA is installed then you will see it in all the places where you share images from as follows:

The Share Target API treats sharing files like a form post. When the file is shared to the Web App the service worker is activated and the fetch handler is invoked with the file data. The data is now inside the Service Worker but I need it in the current window so that I can process it. The service worker knows which window invoked the request, so you can easily target the client and send it the data.

self.addEventListener('fetch', event => {

if (event.request.method === 'POST') {

event.respondWith(Response.redirect('/index.html'));

event.waitUntil(async function () {

const data = await event.request.formData();

const client = await self.clients.get(event.resultingClientId || event.clientId);

const file = data.get('file');

client.postMessage({ file, action: 'load-image' });

}());

return;

}

...

...

});

Once the image is in the user interface, I then process it with the text detection API.

navigator.serviceWorker.onmessage = (event) => {

const file = event.data.file;

const imgEl = document.getElementById('img');

const outputEl = document.getElementById('output');

const objUrl = URL.createObjectURL(file);

imgEl.src = objUrl;

imgEl.onload = async () => {

const texts = await textDetector.detect(imgEl);

texts.forEach(text => {

const textEl = document.createElement('p');

textEl.textContent = text.rawValue;

outputEl.appendChild(textEl);

});

};

...

};

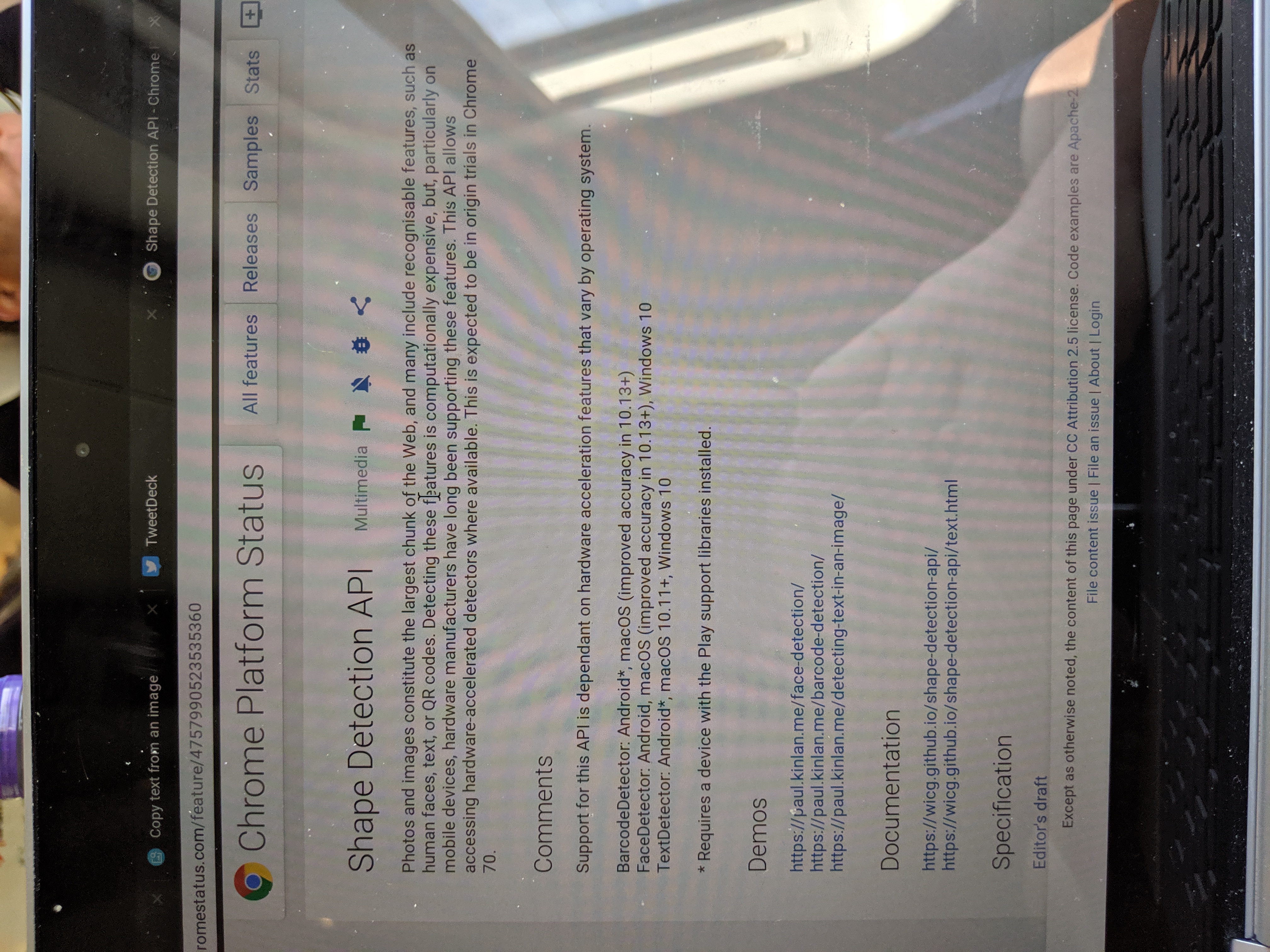

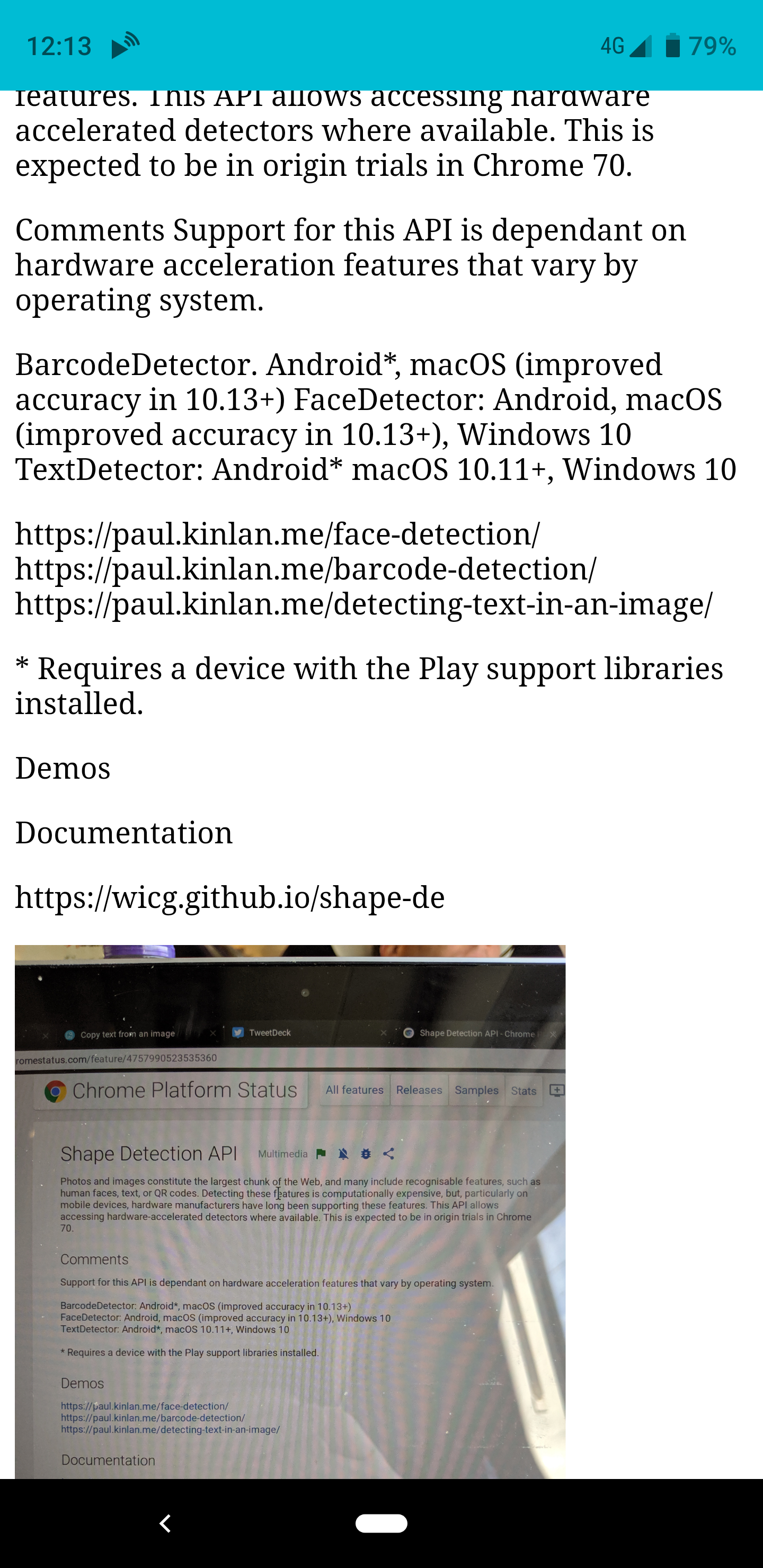

The biggest issue is that the browser doesn’t naturally rotate the image (as you can see below), and the Shape Detection API needs the text to be in the correct reading orientation.

I used the rather easy to use EXIF-Js library to detect the rotation and then do some basic canvas manipulation to re-orientate the image.

EXIF.getData(imgEl, async function() {

// http://sylvana.net/jpegcrop/exif_orientation.html

const orientation = EXIF.getTag(this, 'Orientation');

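// The transforms below use the image's own dimensions.

const width = imgEl.naturalWidth;

const height = imgEl.naturalHeight;

// Orientations 5-8 are rotated by 90 degrees, so the canvas swaps width and height.

canvas.width = (orientation > 4) ? height : width;

canvas.height = (orientation > 4) ? width : height;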

const context = canvas.getContext('2d');

// We have to get the correct orientation for the image

// See also https://stackoverflow.com/questions/20600800/js-client-side-exif-orientation-rotate-and-mirror-jpeg-images

switch(orientation) {

case 2: context.transform(-1, 0, 0, 1, width, 0); break;

case 3: context.transform(-1, 0, 0, -1, width, height); break;

case 4: context.transform(1, 0, 0, -1, 0, height); break;

case 5: context.transform(0, 1, 1, 0, 0, 0); break;

case 6: context.transform(0, 1, -1, 0, height, 0); break;

case 7: context.transform(0, -1, -1, 0, height, width); break;

case 8: context.transform(0, -1, 1, 0, 0, width); break;

}

context.drawImage(imgEl, 0, 0);

});

And Voila, if you share an image to the app it will rotate the image and then analyse it returning the output of the text that it has found.
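The orientation handling can also be written as a small pure function, which makes it easier to check without a canvas. This is only a sketch: orientationToCanvas is a hypothetical name, not part of EXIF-Js, and it assumes the same transform table as the Stack Overflow answer linked above.

```javascript
// Sketch: map an EXIF orientation (1-8) to the canvas size and the six
// arguments for CanvasRenderingContext2D.transform().
// `orientationToCanvas` is a hypothetical helper, not part of EXIF-Js.
const orientationToCanvas = (orientation, imageWidth, imageHeight) => {
  const w = imageWidth;
  const h = imageHeight;
  // Orientations 5-8 include a 90 degree turn, so the canvas swaps its sides.
  const rotated = orientation >= 5 && orientation <= 8;
  const transforms = {
    2: [-1, 0, 0, 1, w, 0],
    3: [-1, 0, 0, -1, w, h],
    4: [1, 0, 0, -1, 0, h],
    5: [0, 1, 1, 0, 0, 0],
    6: [0, 1, -1, 0, h, 0],
    7: [0, -1, -1, 0, h, w],
    8: [0, -1, 1, 0, 0, w],
  };
  return {
    width: rotated ? h : w,
    height: rotated ? w : h,
    // Orientation 1 (or a missing tag) needs no correction: identity matrix.
    transform: transforms[orientation] || [1, 0, 0, 1, 0, 0],
  };
};

// Usage in a page (needs a real canvas and image):
//   const { width, height, transform } =
//     orientationToCanvas(orientation, imgEl.naturalWidth, imgEl.naturalHeight);
//   canvas.width = width;
//   canvas.height = height;
//   const context = canvas.getContext('2d');
//   context.transform(...transform);
//   context.drawImage(imgEl, 0, 0);
```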

It was incredibly fun to create this little experiment, and it has been immediately useful for me. It does, however, highlight the inconsistency of the web platform. These APIs are not available in all browsers; they are not even available in all versions of Chrome. This means that as I write this article on Chrome OS, I can’t use the app, but at the same time, when I can use it… OMG, so cool.

Wood Carving found in Engakuji Shrine near Kamakura

Sakura

I’m told that, more specifically, this is ‘Yaezakura’

Debugging Web Pages on the Nokia 8110 with KaiOS using Chrome OS

This post is a continuation of the post on debugging a KaiOS device with Web IDE, but instead of using macOS, you can now use Chrome OS (m75) with Crostini. I’m cribbing from the KaiOS Environment Setup which is a good start, but not enough for getting going with Chrome OS and Crostini. Below is the rough guide that I followed. Make sure that you are using at least Chrome OS m75 (currently dev channel as of April 15th), then:

New WebKit Features in Safari 12.1 | WebKit — ⭐

Big updates for the latest Safari!

I thought that this was a pretty huge announcement, and the opposite of Google which a while ago said that Google Pay Lib is the recommended way to implement payments… I mean, it’s not a million miles away, Google Pay is built on top of Payment Request, but it’s not PR first.

Payment Request is now the recommended way to implement Apple Pay on the web.

And my favourite feature given my history with Web Intents.

Web Share API

The Web Share API adds navigator.share(), a promise-based API developers can use to invoke a native sharing dialog provided by the host operating system. This allows users to share text, links, and other content to an arbitrary destination of their choice, such as apps or contacts.

Now just to get Share Target API and we are on to a winner! :)
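For reference, calling navigator.share() might look something like the sketch below. shareLink and its nav parameter are hypothetical names I have added so the feature detection can be exercised outside a browser. The only real API call is navigator.share(), which needs a user gesture such as a click.

```javascript
// Sketch: share a link when the Web Share API exists. Returns null when it
// does not, so the caller can fall back (for example to a copy-link button).
// `shareLink` and `nav` are hypothetical; only nav.share() is the real API.
const shareLink = (data, nav = globalThis.navigator) => {
  if (!nav || typeof nav.share !== 'function') return null;
  // navigator.share() returns a promise that rejects if the user cancels.
  return nav.share(data);
};

// Usage in a page, from a click handler:
//   button.addEventListener('click', () => {
//     const pending = shareLink({ title: document.title, url: location.href });
//     if (pending) pending.catch(() => { /* user dismissed the dialog */ });
//   });
```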

Offline fallback page with service worker — ⭐

Years ago, I did some research into how native applications responded to a lack of network connectivity. Whilst I’ve lost the link to the analysis (I could swear it was on Google+), the overarching narrative was that many native applications are so inextricably tied to the internet that they just straight up refuse to function. Sounds like a lot of web apps. The thing that set them apart from the web, though, is that the experience was still ‘on-brand’: Bart Simpson would tell you that you need to be online (for example), and yet for the vast majority of web experiences you get a ‘Dino’ (see chrome://dino).

We’ve been working on Service Worker for a long time now, and whilst we are seeing more and more sites have pages controlled by a Service Worker, the vast majority of sites don’t even have a basic fallback experience when the network is not available.

I asked my good chum Jake if we have any guidance on how to build a generic fall-back page on the assumption that you don’t want to create an entirely offline-first experience, and within 10 minutes he had created it. Check it out.

For brevity, I have pasted the code in below because it is only about 20 lines long. It caches the offline assets, and then for every fetch that is a ‘navigation’ fetch it will see if it errors (because of the network) and then render the offline page in place of the original content.

addEventListener('install', (event) => {

event.waitUntil(async function() {

const cache = await caches.open('static-v1');

await cache.addAll(['offline.html', 'styles.css']);

}());

});

// See https://developers.google.com/web/updates/2017/02/navigation-preload#activating_navigation_preload

addEventListener('activate', event => {

event.waitUntil(async function() {

// Feature-detect

if (self.registration.navigationPreload) {

// Enable navigation preloads!

await self.registration.navigationPreload.enable();

}

}());

});

addEventListener('fetch', (event) => {

const { request } = event;

// Always bypass for range requests, due to browser bugs

if (request.headers.has('range')) return;

event.respondWith(async function() {

// Try to get from the cache:

const cachedResponse = await caches.match(request);

if (cachedResponse) return cachedResponse;

try {

// See https://developers.google.com/web/updates/2017/02/navigation-preload#using_the_preloaded_response

const response = await event.preloadResponse;

if (response) return response;

// Otherwise, get from the network

return await fetch(request);

} catch (err) {

// If this was a navigation, show the offline page:

if (request.mode === 'navigate') {

return caches.match('offline.html');

}

// Otherwise throw

throw err;

}

}());

});

That is all. When the user is online they will see the default experience.

And when the user is offline, they will get the fallback page.
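The worker only takes effect once a page registers it. A minimal sketch of that step follows, assuming the script above is saved as /sw.js (a hypothetical path). registerServiceWorker and its nav parameter are names I have made up for illustration.

```javascript
// Sketch: register the offline-fallback worker if the browser supports it.
// '/sw.js' is an assumed path; `registerServiceWorker` is a hypothetical helper.
const registerServiceWorker = (scriptUrl = '/sw.js', nav = globalThis.navigator) => {
  // Older browsers (and non-browser environments) have no serviceWorker object.
  if (!nav || !nav.serviceWorker) return null;
  // register() returns a promise for the ServiceWorkerRegistration.
  return nav.serviceWorker.register(scriptUrl);
};

// Usage in a page:
//   const pending = registerServiceWorker();
//   if (pending) pending.catch((err) => console.warn('SW registration failed', err));
```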

I find this simple script incredibly powerful, and yes, whilst it can still be improved, I do believe that even just a simple change in the way that we speak to our users when there is an issue with the network has the ability to fundamentally improve the perception of the web for users all across the globe.

Update: Jeffrey Posnick kindly reminded me about using Navigation Preload to not have to wait on SW boot for all requests. This is especially important if you are only controlling failed network requests.

testing block image upload

This is just a test to see if I got the image upload right. If you see this, then yes I did :)

Editor.js — ⭐

I’ve updated my Hugo-based editor to try and use EditorJS as, well, the editor for the blog.

Workspace in classic editors is made of a single contenteditable element, used to create different HTML markups. Editor.js workspace consists of separate Blocks: paragraphs, headings, images, lists, quotes, etc. Each of them is an independent contenteditable element (or more complex structure) provided by Plugin and united by Editor’s Core.

I think it works.

I struggled a little bit with the codebase: the examples all use ES Modules, however the NPM dist is all output in IIFE ES5 code. But once I got over that hurdle it has been quite easy to build a UI that looks a bit more like Medium.

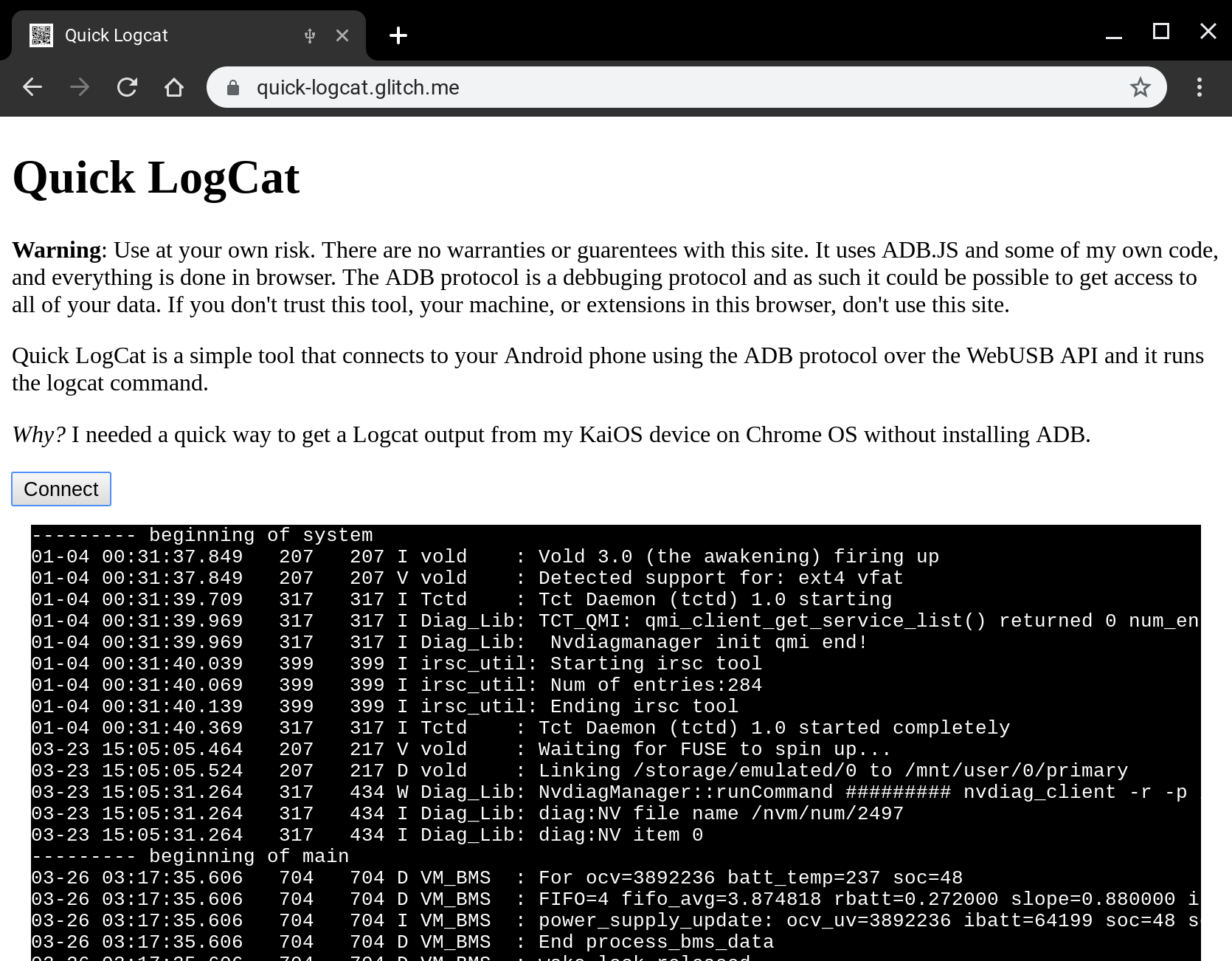

Quick Logcat - debugging android from the web — ⭐

I was on the flight to Delhi this last week and I wanted to be able to debug my KaiOS device with Chrome OS - I never quite got to the level that I needed for a number of reasons (port forwarding didn’t work - more on that in another post), but I did get to build a simple tool that really helps me build for the web on Android based devices.

I’ve been using WebADB.js for a couple of side projects, but I thought I would at least release one of the tools I made last week that will help you if you ever need to debug your Android device and you don’t have adb installed or any other Android system tools.

Quick Logcat is just that. It can connect to any Android device that is in developer mode, has USB enabled and is connected to your machine over USB. Most importantly, you have to grant access from the web page to connect to the device. Once that is all done it just runs adb shell logcat to create the following output.

Check out the source over on my github account, specifically the logger class that has the brunt of my logic. Note that a lot of this code is incredibly similar to the demo over at webadb.github.io, but it should hopefully be relatively clear to follow how I interface with the WebUSB API (which is very cool). The result is the following code that is in my index file: I instantiate a controller, connect to the device, which opens up the USB port, and then I start the logcat process and, well, cat the log via logcat.

It even uses .mjs files :D

<script type="module">

import LogcatController from "/scripts/main.mjs";

onload = () => {

const connect = document.getElementById("connect");

const output = document.getElementById("output");

let controller = new LogcatController();

connect.addEventListener("click", async () => {

await controller.connect();

controller.logcat((log) => {

output.innerText += log;

})

});

};

</script>

ADB is an incredibly powerful protocol, you can read system files, you can write over personal data and you can even easily side-load apps, so if you give access to any external site to your Android device, you need to completely trust the operator of the site.

This demo shows the power and capability of the WebUSB API, we can interface with hardware without any natively installed components, drivers or software and with a pervasive explicit user opt-in model that stops drive-by access to USB components.
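The opt-in step is the WebUSB device picker. Below is a rough sketch of asking for an ADB-capable device. It is not the code from Quick Logcat or WebADB.js: requestAdbDevice is a hypothetical helper, and the filter values are the interface class, subclass and protocol that ADB is commonly documented to use, so treat them as an assumption.

```javascript
// Assumed ADB interface identifiers: vendor-specific class 0xff,
// subclass 0x42, protocol 0x01.
const ADB_INTERFACE_FILTER = { classCode: 0xff, subclassCode: 0x42, protocolCode: 0x01 };

// Hypothetical helper: show the browser's USB device picker, limited to
// devices that expose an ADB interface. Returns null without WebUSB support.
const requestAdbDevice = (usb = globalThis.navigator && globalThis.navigator.usb) => {
  if (!usb) return null;
  // requestDevice() must be called from a user gesture; it resolves with the
  // USBDevice the user picked, or rejects if they cancel the picker.
  return usb.requestDevice({ filters: [ADB_INTERFACE_FILTER] });
};

// Usage in a page:
//   connect.addEventListener('click', async () => {
//     const device = await requestAdbDevice();
//     if (device) await device.open();
//   });
```

Nothing is exposed to the page until the user picks a device in that dialog, which is what stops drive-by access.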

I’ve got a couple more ideas up my sleeve; it will totally be possible to do firmware updates via the web if you so choose. One thing we saw a lot of in India was the ability to side-load APKs on to users’ new phones. Whilst I am not saying we must do it, a clean web interface would be far preferable to the software people use today.

What do you think you could build with Web USB and adb access?

Debugging Web Pages on the Nokia 8110 with KaiOS

We’ve been doing a lot of development on feature phones recently and it’s been hard, but fun. The hardest bit is that on KaiOS we found it impossible to debug web pages, especially on the hardware that we had (The Nokia 8110). The Nokia is a great device, it’s built with KaiOS which we know is based on something akin to Firefox 48, but it’s locked down, there is no traditional developer mode like you get on other Android devices, which means you can’t connect Firefox’s WebIDE easily.

Object Detection and Augmentation — ⭐

I’ve been playing around a lot with the Shape Detection API in Chrome and I really like the potential it has, for example a very simple QRCode detector I wrote a long time ago has a JS polyfill, but uses new BarcodeDetector() API if it is available.

You can see some of the other demos I’ve built here using the other capabilities of the shape detection API: Face Detection, Barcode Detection and Text Detection.
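The "use the native API if it is there, otherwise the polyfill" pattern can be sketched roughly like this. It is not the code from my QRCode detector. createQrDetector, polyfillDetect and scope are hypothetical names, and the only real API used is BarcodeDetector with its formats option and detect() method.

```javascript
// Sketch: prefer the native BarcodeDetector, fall back to a JS polyfill.
// `polyfillDetect` stands in for whatever JS decoder you already have; it
// should take an image source and return (a promise of) detected codes.
const createQrDetector = (polyfillDetect, scope = globalThis) => {
  if ('BarcodeDetector' in scope) {
    const detector = new scope.BarcodeDetector({ formats: ['qr_code'] });
    // detect() resolves with an array of results, each with a rawValue.
    return (image) => detector.detect(image);
  }
  return polyfillDetect;
};

// Usage in a page:
//   const detect = createQrDetector(myJsQrDecoder);
//   const codes = await detect(videoOrImageElement);
//   codes.forEach((code) => console.log(code.rawValue));
```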

I was pleasantly surprised when I stumbled across Jeeliz at the weekend and I was incredibly impressed at the performance of their toolkit - granted I was using a Pixel3 XL, but detection of faces seemed significantly quicker than what is possible with the FaceDetector API.

It got me thinking a lot. This toolkit for Object Detection (and ones like it) uses APIs that are broadly available on the Web, specifically Camera access, WebGL and WASM, which unlike Chrome’s Shape Detection API (which is only in Chrome and not consistent across all platforms that Chrome is on) can be used to build rich experiences easily and reach billions of users with a consistent experience across all platforms.

Augmentation is where it gets interesting (and really what I wanted to show off in this post) and where you need middleware libraries that are now coming to the platform. We can build the fun snapchat-esque face filter apps without having users install MASSIVE apps that harvest huge amounts of data from the user’s device (because there is no underlying access to the system).

Outside of the fun demos, it’s possible to solve very advanced use-cases quickly and simply for the user, such as:

- Text Selection directly from the camera or photo from the user

- Live translation of languages from the camera

- Inline QRCode detection so people don’t have to open WeChat all the time :)

- Auto extract website URLs or address from an image

- Credit card detection and number extraction (get users signing up to your site quicker)

- Visual product search in your store’s web app.

- Barcode lookup for more product details in your store’s web app.

- Quick cropping of profile photos on to people’s faces.

- Simple A11Y features to let a user hear the text found in images.

I just spent 5 minutes thinking about these use-cases — I know there are a lot more — but it hit me that we don’t see a lot of sites or web apps utilising the camera, instead we see a lot of sites asking their users to download an app, and I don’t think we need to do that any more.

Update: Thomas Steiner on our team mentioned in our team chat that it sounds like I don’t like the current ShapeDetection API. I love the fact that this API gives us access to the native shipping implementations of each of the respective systems, however as I wrote in The Lumpy Web, Web Developers crave consistency in the platform and there are a number of issues with the Shape Detection API that can be summarized as:

- The API is only in Chrome

- The API in Chrome is vastly different on every platform because their underlying implementations are different. Android only has points for landmarks such as mouth and eyes, whereas macOS has outlines. On Android the TextDetector returns the detected text, whereas on macOS it returns a ‘Text Presence’ indicator… This is not to mention all the bugs that Surma found.

The web as a platform for distribution makes so much sense for experiences like these that I think it would be remiss of us not to do it, but the above two groupings of issues lead me to question the long-term need to implement every feature on the web platform natively, when we could implement good solutions in a package that is shipped using the features of the platform today like WebGL, WASM and in the future Web GPU.

Anyway, I love the fact that we can do this on the web and I am looking forwards to seeing sites ship with them.

Got web performance problems? Just wait... — ⭐

I saw a tweet by a good chum and colleague, Mariko, about testing on a range of low end devices keeping you really grounded.

The context of the tweet is that we are looking at what Web Development is like when building for users who live daily on these classes of devices.

The team is doing a lot of work now in this space, but I spent a day building a site and it was incredibly hard to make anything work at an even slightly reasonable level of performance - here are some of the problems that I ran into:

- Viewport oddities, and mysterious re-introduction of 300ms click-delay (can work around).

- Huge repaints of entire screen, and it’s slow.

- Network is slow

- Memory is constrained, and subsequent GC’s lock the main thread for multiple seconds

- Incredibly slow JS execution

- DOM manipulation is slow

For many of the pages I was building, even on a fast wifi connection pages took multiple seconds to load, and subsequent interactions were just plain slow. It was hard, it involved trying to get as much as possible off the main thread, but it was also incredibly gratifying at a technical level to see changes in algorithms and logic that I wouldn’t have done for all my traditional web development, yield large improvements in performance.

I am not sure what to do long-term, I suspect a huge swathe of developers that we work with in the more developed markets will have a reaction ‘I am not building sites for users in [insert country x]‘, and at a high-level it’s hard to argue with this statement, but I can’t ignore the fact that 10’s of millions of new users are coming to computing each year and they will be using these devices and we want the web to be the platform of choice for content and apps lest we are happy with the rise of the meta platform.

We’re going to need to keep pushing on performance for a long time to come. We will keep creating tools and guidance to help developers load quickly and have smooth user interfaces :)

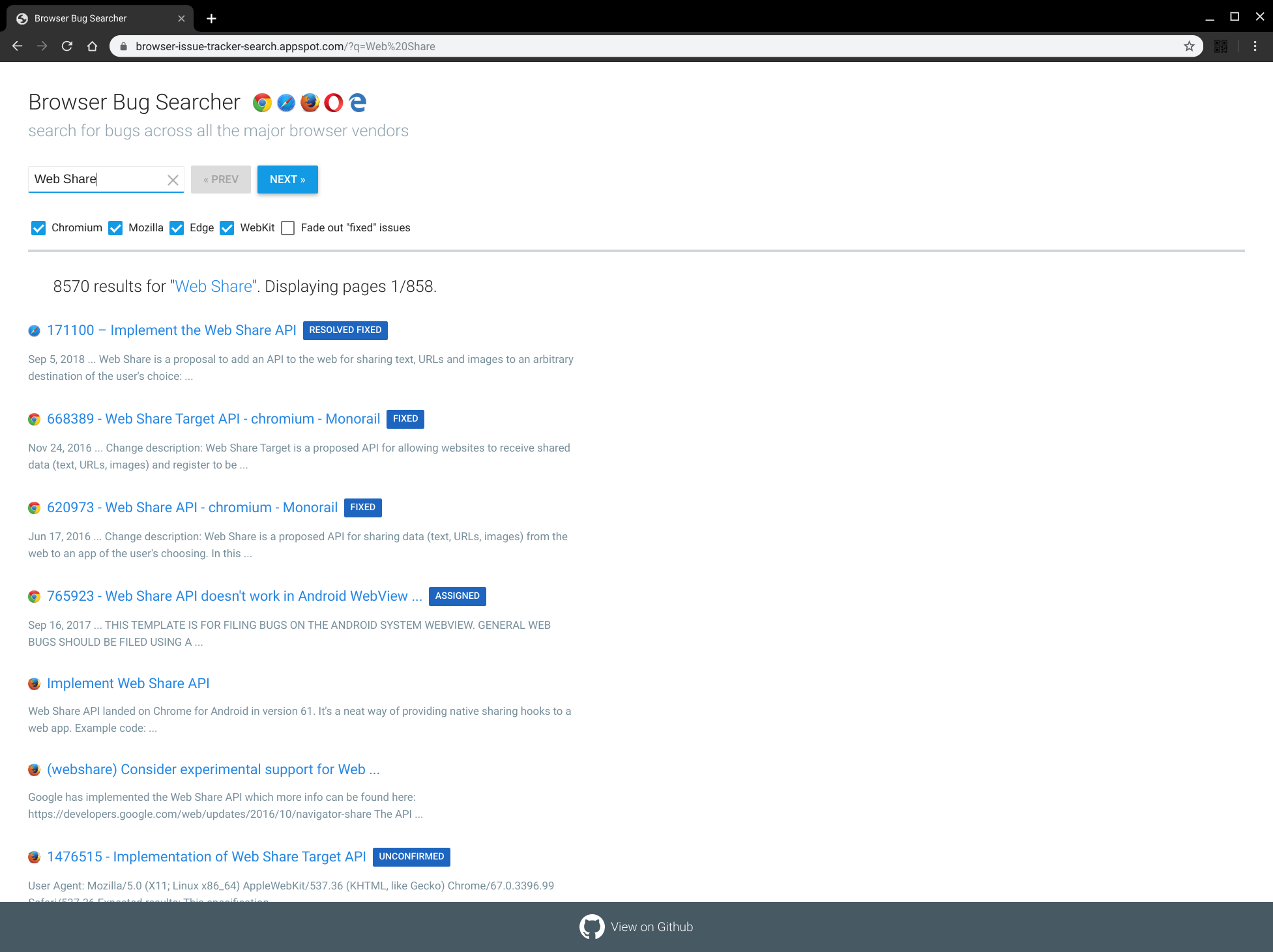

Browser Bug Searcher — ⭐

I was just reflecting on some of the work our team has done and I found a project from 2017 that Robert Nyman and Eric Bidelman created: Browser Bug Searcher!

It’s incredible that with just a few key presses you have a great overview of your favourite features across all the major browser engines.

This actually highlights one of the issues that I have with crbug and webkit bug trackers, they don’t have a simple way to get feeds of data in formats like RSS. I would love to be able to use my topicdeck aggregator with bug categories etc so I have a dashboard of all the things that I am interested in based on the latest information from each of the bug trackers.

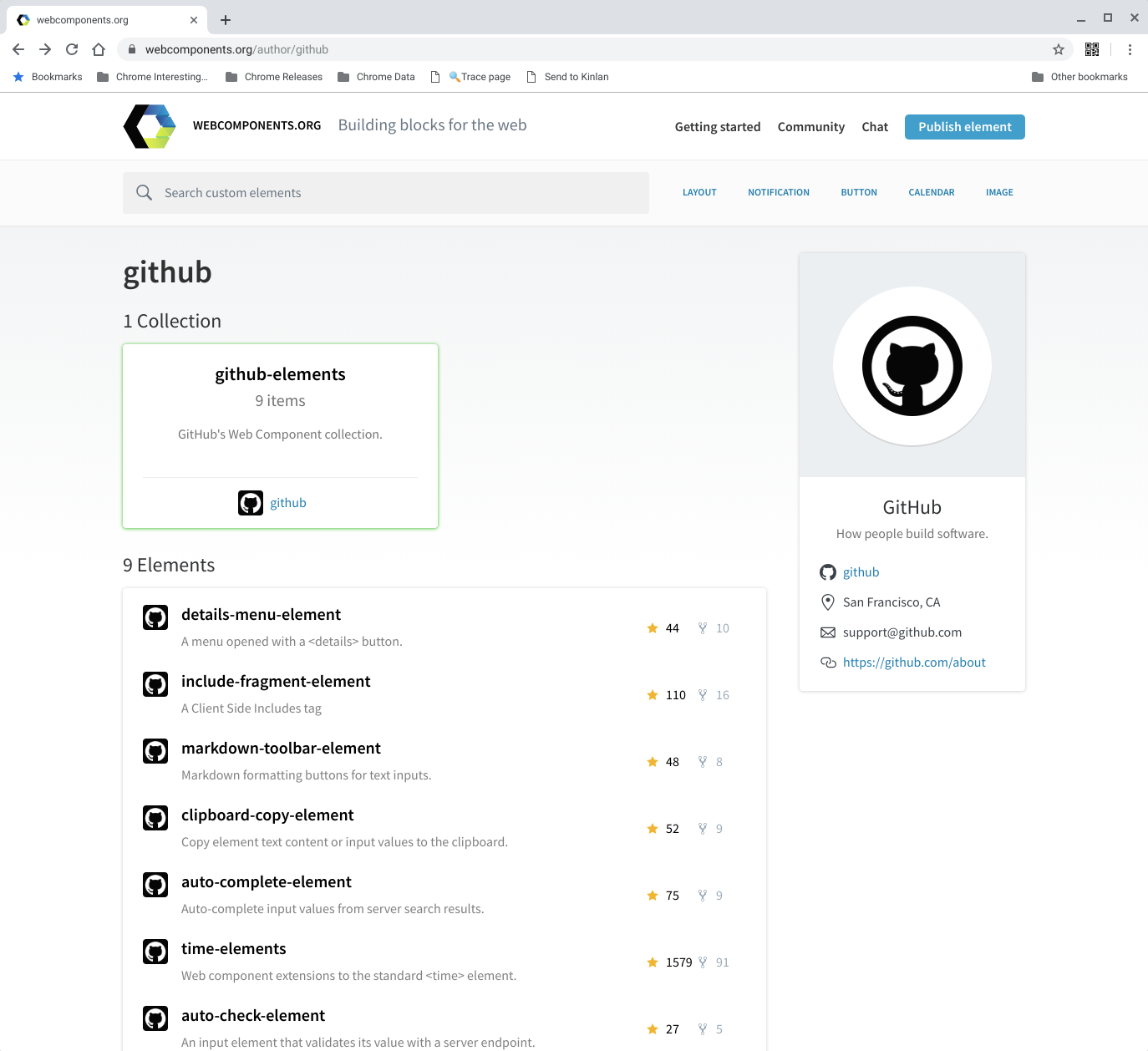

Github's Web Components — ⭐

I was looking for a quick markdown editor on https://www.webcomponents.org/ so that I can make posting to this blog easier and I stumbled across a neat set of components by GitHub.

I knew that they had the <time-element> but I didn’t know they had such a nice and simple set of useful elements.

London from Kingscross

Looks kinda nice today.

The GDPR mess

The way we (as an industry) implement GDPR consent is a mess. I’m not sure why anyone would choose anything other than ‘Use necessary cookies only’, however I really can’t tell the difference between the options or the trade-off of either choice, not to mention that I can’t verify that it is using necessary cookies only.

Brexit: History will judge us all — ⭐

History will judge us all on this mess, and I hope it will be a case study for all on the effects of nationalism, self-interest, colonial hubris and celebrity buffoonery.

Fuckers.